Unveiling the Veil: Navigating Bias in the Realm of AI

By Simon

Using generative AI in marketing can be challenging because these tools are susceptible to bias. Many professionals in marketing, sales, and customer service hesitate to adopt them because AI occasionally delivers skewed information.

Concerns about AI’s propensity for bias are well-founded, but what causes this bias? This article will explore the inherent risks of AI use, real-life instances of AI bias, and potential strategies for mitigating such adverse impacts.

Unraveling AI Bias

AI bias is the tendency of machine learning algorithms to produce biased results when performing tasks such as data analysis or content creation. These biases often reinforce harmful stereotypes, such as those related to race and gender.

The Artificial Intelligence Index Report 2023 notes that AI bias shows up as outputs that reinforce stereotypes harmful to specific groups. Conversely, an AI system is considered fair when its predictions or outputs neither discriminate against nor favor any particular group.
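One common way to make the fairness definition above measurable is demographic parity: compare how often each group receives a favorable output (for example, an approved application or a displayed offer). The sketch below is a minimal illustration assuming binary decisions and a single group label per record; the function names and sample data are hypothetical, and demographic parity is only one of several fairness metrics, not necessarily the one the AI Index uses.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1s) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += decision  # assumes decision is 0 or 1
    return {g: favorable[g] / totals[g] for g in totals}

def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs: 1 = favorable, 0 = unfavorable
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions, groups))  # 0.5
```

A gap of 0.5 means group A receives favorable outputs 50 percentage points more often than group B, which would warrant a closer look.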

Beyond reinforcing prejudice and stereotypes, AI bias can also stem from flaws in the data itself, such as:

- Sample selection bias, where the data used by the AI isn’t representative of the entire population, so its predictions and recommendations don’t generalize to everyone.

- Measurement bias, which arises when the data collection process is skewed, causing the AI to draw biased conclusions.
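Sample selection bias can be shown with a small, deterministic toy example: if a sample draws only from one part of the population, any statistic learned from it drifts away from the true population value. The groups, outcomes, and counts below are invented purely for illustration.

```python
# Hypothetical population of 100 (group, outcome) pairs; outcome 1 = e.g. "responded to a campaign"
population = (
    [("urban", 1)] * 30 + [("urban", 0)] * 20
    + [("rural", 1)] * 10 + [("rural", 0)] * 40
)

def positive_rate(rows):
    """Share of rows whose outcome is 1."""
    return sum(outcome for _, outcome in rows) / len(rows)

# A skewed sample that only includes urban records (sample selection bias)
skewed_sample = [row for row in population if row[0] == "urban"]

print(positive_rate(population))     # 0.4
print(positive_rate(skewed_sample))  # 0.6
```

A model trained on the skewed sample would overestimate the response rate by half (0.6 versus 0.4) and would know nothing about rural customers at all.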

The Connection between AI Bias and Societal Bias

AI bias mirrors societal bias.

The data used to train AI often contains societal prejudices, and those biases carry over into AI outputs. For instance, an image generator asked to create a picture of a CEO may default to images of White men, reflecting historical bias in employment data.

As AI becomes more pervasive, there is growing apprehension about its potential to magnify existing societal biases, causing harm to diverse groups of people.

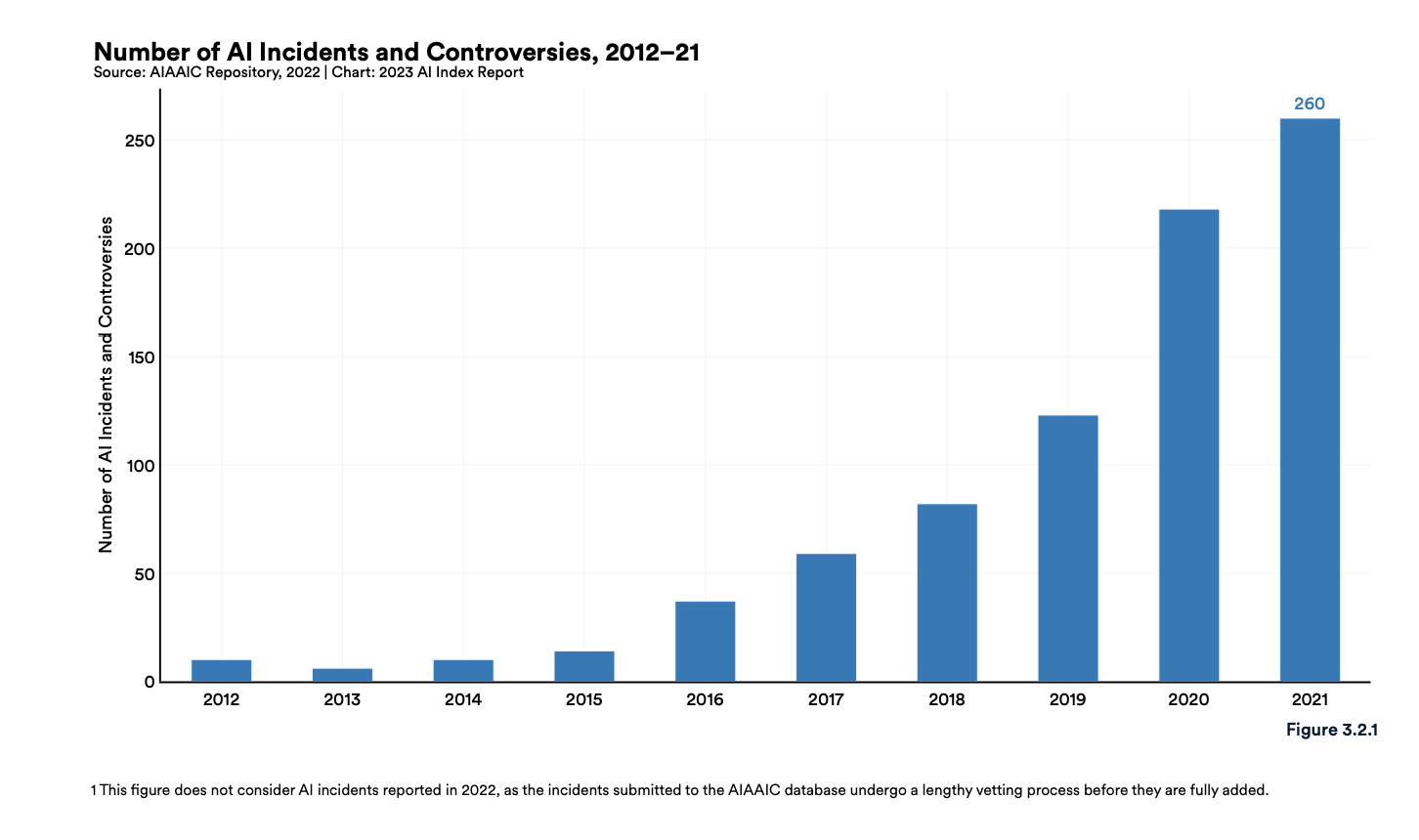

Noteworthy Instances of AI Bias

According to the AI, Algorithmic, and Automation Incidents and Controversies (AIAAIC) Repository, the number of reported AI incidents and controversies in 2021 was 26 times higher than in 2012.

Let’s examine a few instances of AI bias.

Mortgage lending stands out as a significant example of AI bias. Lending algorithms have been found to be 40-80% more likely to reject loan applications from people of color. This bias stems from historical lending data in which minority applicants were denied loans more frequently, which teaches the AI to reproduce the same pattern.
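A figure like “40-80% more likely to reject” is a relative comparison of denial rates between two groups. The sketch below shows only that arithmetic, using made-up application counts rather than the data behind the statistic; real lending analyses also compare otherwise similar applicants (income, debt, and so on), which this toy version does not attempt. The function names are hypothetical.

```python
def denial_rate(denied, applications):
    """Fraction of applications that were denied."""
    return denied / applications

def percent_more_likely(rate_group, rate_reference):
    """How much more likely (in percent) one group is to be denied than the reference group."""
    return (rate_group / rate_reference - 1) * 100

# Hypothetical counts for illustration only
reference = denial_rate(denied=100, applications=1000)  # 10% denied
group = denial_rate(denied=150, applications=1000)      # 15% denied

# Rounded because floating-point division gives 49.99999999999998 here
print(round(percent_more_likely(group, reference)))  # 50
```

Note that a 15% versus 10% denial rate is only a 5-point absolute gap, yet it means the first group is 50% more likely to be denied, which is why relative figures like these can sound larger than the raw rates.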

Medical diagnoses can also be skewed by sample selection bias. For instance, if a physician who predominantly treats White patients relies on AI to analyze that patient data and suggest treatment plans, the recommendations may not apply to a more diverse population.

Several companies have faced real-world consequences from biased algorithms or have found their algorithms amplifying the potential for bias. Here are a few examples:

- Amazon’s Recruitment Algorithm: Amazon built a hiring algorithm trained on a decade’s worth of employment data. Because that data reflected a male-dominated workforce, the algorithm showed bias against female applicants and penalized resumes that included the word “women’s.”

- Twitter’s Image Cropping Issue: In 2020, a viral tweet highlighted that Twitter’s image-cropping algorithm appeared to favor White faces over Black ones. Twitter responded that its earlier analyses had not shown racial or gender bias, but it acknowledged the potential for harm and the need for better foresight in product design and development.

- Biased Facial Recognition by Robots: A study in which robots categorized people based on facial scans revealed clear bias. The robots were more likely to identify women as homemakers, Black men as criminals, and Latino men as janitors, and less likely to identify women of all ethnicities as doctors.

- Intel and Classroom Technologies’ Monitoring Software: Intel and Classroom Technologies’ software, Class, uses facial recognition to gauge students’ emotions while they learn. Because cultural norms for expressing emotion vary, the software could misinterpret students’ emotional states.

Tackling AI Bias

With AI bias manifesting in so many ways in real life, AI ethics is gaining prominence.

AI’s potential to spread harmful misinformation, such as deepfakes, and to generate factually incorrect information makes it necessary to control and minimize AI bias. Strategies for managing AI and minimizing potential bias include:

- Human oversight: Regular monitoring of AI outputs, data analysis, and corrective measures when bias is detected.

- Evaluating the potential for bias: Some AI use cases carry a higher risk of bias than others, so it’s essential to assess how likely a given use case is to produce biased outputs.

- Prioritizing AI ethics: Continued research and focus on AI ethics is crucial to devise strategies to minimize AI bias.

- Diversifying AI: Including diverse perspectives in how AI is built and reviewed can lead to less biased practices.

- Acknowledging human bias: Recognizing personal biases can help ensure that they don’t feed into the AI.

- Transparency: It’s vital to communicate clearly about AI usage, for instance by disclosing when an article is AI-generated.
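For the human-oversight and bias-evaluation steps above, one widely used screening heuristic (not mentioned in the original list) is the “four-fifths rule” from US employment-selection guidance: a group whose favorable-outcome rate falls below 80% of the highest group’s rate is flagged for review. The sketch below assumes you already log each AI decision with a group label and have computed per-group rates; the function name, group labels, and the lead-scoring scenario are hypothetical, and a flag is a prompt for human review, not proof of bias.

```python
def flag_adverse_impact(rates, threshold=0.8):
    """Return groups whose favorable-outcome rate is below `threshold` times the best group's rate.

    rates: dict mapping group label -> share of favorable outcomes (0.0 to 1.0)
    """
    best = max(rates.values())
    if best == 0:
        return []  # no group received favorable outcomes, so the ratio is undefined
    return sorted(g for g, r in rates.items() if r / best < threshold)

# Hypothetical weekly audit of an AI lead-scoring tool
weekly_rates = {"group_a": 0.50, "group_b": 0.45, "group_c": 0.30}
print(flag_adverse_impact(weekly_rates))  # ['group_c']
```

Here group_b’s ratio is 0.9 and passes, while group_c’s ratio is 0.6 and gets flagged, so a person would then investigate whether the gap reflects bias in the data or model.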

Despite the potential risks, responsible usage of AI is possible. As interest in AI continues to grow, the key to minimizing harm lies in understanding the potential for bias and taking action to prevent it from amplifying societal prejudices.